MLOps: Why You Need It in Your Organisation – Part 2

Are you just starting to put your machine learning models into production? Or do you already have some machine learning models in production and want to scale your results? Enter MLOps!

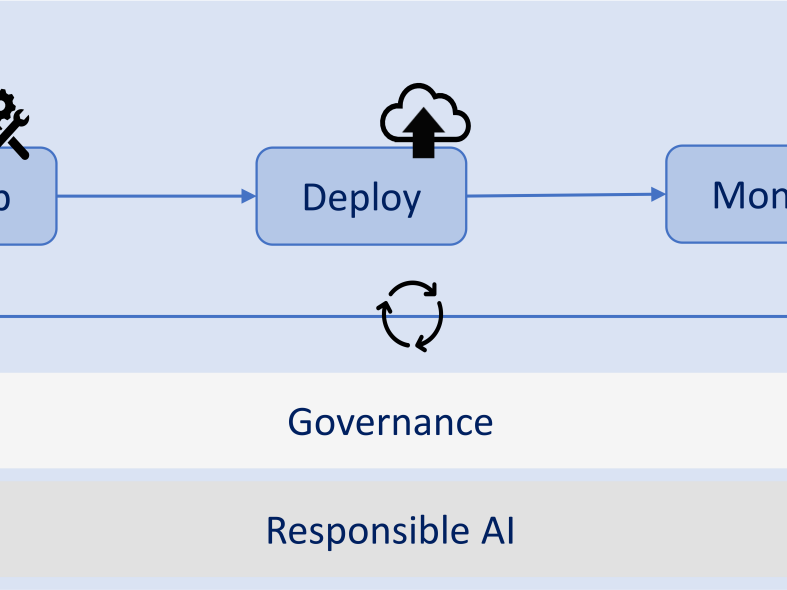

This blog post is the second part of a two-part series on MLOps principles. In the first part, I introduced MLOps and explained the challenges of developing an ML model and deploying it to production, along with their solutions. In this second part, I will explain different aspects of the life cycle management of your ML models and conclude the series.

While you might think pushing a model into production is the final step of operationalising it, it is really just the beginning of the story. Monitoring the model, that is, making sure it does what it is supposed to do, when it is supposed to do it, is essential. A key consideration is model performance. You need to monitor this closely because the performance of an ML model generally degrades over time, and you need to notice when the degradation is severe enough to require a model update or replacement. There are many possible reasons for this degradation, but a main one is data drift: your training data no longer represents the data the model sees in production (that is, the information upon which your model relies no longer reflects the reality of the data upon which you are using it). The underlying causes range from a change in your clients' behaviour to a technical change in the schema of the data you are collecting.
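To make the idea of monitoring for data drift more concrete, here is a minimal sketch of one common drift measure, the Population Stability Index (PSI), computed for a single numeric feature. The function name, the number of bins, and the sample data are my own illustrative assumptions, and the thresholds in the comments are a widely used rule of thumb rather than a standard you must follow.

```python
import math
import random


def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a production sample of one feature.

    Illustrative sketch: bins are equal-width and derived from the training
    sample; production values outside that range fall into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    random.seed(0)
    train = [random.gauss(0, 1) for _ in range(5000)]       # data seen at training time
    production = [random.gauss(1, 1) for _ in range(5000)]  # hypothetical shifted data
    psi = population_stability_index(train, production)
    # Rule of thumb (an assumption, tune for your case):
    # below 0.1 is stable, above 0.25 suggests significant drift.
    print(f"PSI = {psi:.3f}")
```

In practice you would compute a measure like this per feature on a schedule and alert when it crosses a threshold you have agreed on with the business. PSI only looks at the input distribution, so it complements, rather than replaces, tracking the model's actual performance once ground truth becomes available.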

This might not cause a lot of extra work if you already have a Continuous Training (CT) process in place. In that case, a trigger can automatically train a new model and push it into production. However, a CT process is usually only applicable to the simpler cases of drift, where you only need to retrain the same ML model (with the same architecture) on fresh training data. When you have a new business question, a fundamental change in behaviour, or new data becoming available, the architecture of your ML model must also be updated, which leaves you with a manual update (in practice, a redevelopment) by a data scientist. In other words, if you do not have a CT process, or if the CT process cannot handle the required model update, you need to ask your data scientists to rebuild (retrain) the model and deploy it to production.
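The decision described above can be sketched as a small piece of triggering logic. Everything here is hypothetical: the `MonitoringReport` fields, the `decide_action` function, and the default threshold are names and values I made up for illustration, not part of any particular MLOps tool.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    KEEP = "keep the current model"
    RETRAIN = "trigger automated retraining (CT)"
    REDEVELOP = "hand over to a data scientist"


@dataclass
class MonitoringReport:
    drift_score: float             # output of whatever drift measure you monitor
    needs_new_architecture: bool   # set by people: new business question,
                                   # fundamental behaviour change, or new data sources


def decide_action(report, drift_threshold=0.25, ct_available=True):
    """Map a monitoring report to the next step in the model's life cycle."""
    # CT cannot change the architecture, so these cases always go to a human.
    if report.needs_new_architecture:
        return Action.REDEVELOP
    # Simple drift: retrain the same model on fresh data if CT is in place.
    if report.drift_score >= drift_threshold:
        return Action.RETRAIN if ct_available else Action.REDEVELOP
    return Action.KEEP


if __name__ == "__main__":
    report = MonitoringReport(drift_score=0.4, needs_new_architecture=False)
    print(decide_action(report).value)
```

The point of the sketch is the ordering of the checks: the question of whether the architecture still fits comes before the question of whether the data has drifted, because no amount of automated retraining fixes a model that answers the wrong question.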

As I have pointed out a couple of times, different competencies are essential to a well-functioning MLOps process.

This means that, for a finely tuned, efficient MLOps process, you need a multi-skilled team of individuals who can communicate respectfully and effectively across perspectives.

Having highlighted the challenges in the end-to-end MLOps methodology, let me recap. ML models are built on both data and code, making their life cycle management even more challenging than that of software. Managing that life cycle means putting version control not only on the code and algorithm, but also on the data. Constantly changing data drives the need for a more dynamic life cycle and increases the importance of putting in place a proper monitoring procedure to decide when to retrain or replace the ML model.
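As a minimal sketch of what versioning the data alongside the code can look like, the snippet below fingerprints a training dataset with a content hash and stores it next to the code version in a lineage record. The function names, the record layout, and the example values are assumptions for illustration; dedicated data versioning tools do considerably more than this.

```python
import hashlib
import json


def dataset_fingerprint(rows):
    """Return a SHA-256 hash of the dataset content.

    Rows are dictionaries; keys are sorted so that column order does not
    change the fingerprint. Row order does matter in this simple sketch.
    """
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def lineage_record(code_version, rows, params):
    """Tie a trained model to the exact code, data, and parameters behind it."""
    return {
        "code_version": code_version,            # e.g. a Git commit hash
        "data_sha256": dataset_fingerprint(rows),
        "params": params,
    }


if __name__ == "__main__":
    # Hypothetical training data and parameters.
    rows = [{"age": 41, "churned": 0}, {"age": 29, "churned": 1}]
    record = lineage_record("abc1234", rows, {"max_depth": 4})
    print(json.dumps(record, indent=2))
```

Storing a record like this with every trained model lets you answer, later on, exactly which data and which code produced the model that is currently in production.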

Beyond the technical and process challenges, ML models require multiple people with different skills and perspectives to work with each other, which emphasises the importance of having a good communication channel. This communication must go into detail, because the same word might have different meanings for different people. As an example, performance might mean the accuracy of the model to a data scientist, the business value the model brings to the organisation to a business expert, the speed of calculation to a software developer, and how fast the model or pipeline runs to a data engineer.

In this blog post series, I have tried to shed some light on the core principles of MLOps and highlight things to consider when you use, maintain, and derive real value from ML models. Of course, there are other complexities and considerations in the life cycle management of ML models that I did not discuss here, e.g. governance and Responsible AI. I leave each of these to its own dedicated blog post.

I would like to thank Diane Reynolds, Manne Fagerlind, and Julatouch Pipatanangura for their valuable comments and suggestions, which led to the improvement of this blog post series.

